Author: puntofisso

-

Vacuum tubes can be art

I rarely watch online videos that last more than 30 seconds. This time, however, I couldn’t stop watching for the whole 17 minutes of this one.

It’s about a French ham radio operator who makes his own vacuum tubes. The great thing about this video is that it merges great technique (the guy really knows what he’s doing) with an almost hypnotic jazz soundtrack. The elegant and delicate way he carries out the whole process is, in its own very nerdy way, totally artistic. No surprise: he’s French.

The making of the tubes is explained on his website. It’s in French, but Google Translate renders it fairly accurately.

Fabrication d’une lampe triode (Making a triode tube)

Uploaded by F2FO.

-

Christmas Time

This blog, as usual, has been dormant for a while. I’m not one of those bloggers who spit out everything that passes through their minds; I generally prefer to report on events, technologies, and research ideas that I genuinely enjoy and understand.

So, let me stray a little from my usual scope to write a bit about myself and my expectations for the new year.

Firstly, I was promoted in my day job. I’ve been working at St George’s University of London for a year and a half as a Systems Developer and Administrator. Last July a colleague left, so I applied to take over his post as Senior Systems Analyst, which I finally got in November. I’m now in charge of the mail and backup servers, and I look after the storage systems across our distributed network. Most interestingly, after having the chance to work on the implementation of our Common Research Information System using Symplectic, I’ve been able to initiate a couple of projects that I believe will greatly improve our services and our positioning as an educational institution in 2011:

– the development of a new process for service support, using Request Tracker

– the design, deployment, and marketing of a mobile portal.

I believe that both projects will help to improve the quality of our services and reach a wider audience, especially given the cuts we’ll be experiencing. Internal behavioural changes will be needed, along with a lot of inter-departmental cooperation, to get everyone to accept the changes; I’m already working on the advocacy sub-projects.

Secondly, 2010 has been a great year for Geo development. After I started to get interested in the topic a couple of years ago, I got in touch with some great people who are really helping me expand my knowledge and views. For 2011 I expect to improve my practical skills and to do some actual work in the area: the first opportunity is precisely our corporate mobile portal, which will have extensive location-aware capabilities.

Lastly, as a photographer I finally managed to experiment with techniques such as HDR and to do some nature photography in the Salt Ponds of Margherita di Savoia. In 2011 I’m planning to do all I can to turn semi-pro, launching a photography website and organising my first themed exhibition in a local cafe. I created my own Christmas cards this year, with the photo you see below: it’s a picture I took in Bologna, where I lived until 2008, and it shows the Christmas tree we have every year in the main square.

That’s all for the moment. Enjoy your holidays, whatever you wish to celebrate 🙂

-

The several issues of geo development: a chronicle of October's GeoMob

GeoMob has returned after a longer-than-usual hiatus due to other (and definitely very interesting) commitments of our former Mr GeoMob, Christopher Osborne. It was a great night, with the usual format of four presentations covering aspects of research, development and business. Here’s my summary and comments.

I’m a bit unsure how to comment on TweetDeck’s involvement in the GeoSocial business.

Max’s presentation focused on the integration of their application with FourSquare. It’s a tightly coupled integration that lets users follow their Twitter friends using the locative power of FourSquare, i.e. by putting them on a map. Max gave us some food for thought when commenting that “Google Latitude is not good for us because it gives out location continuously, whereas we are looking for discrete placement of users on POIs”: this is a real example of why more is not necessarily better and, in my opinion, the main reason why, to date, Latitude has been less successful at attracting users’ attention to locative services.

However, I’m not totally sure why TweetDeck sees its future in becoming a platform that integrates Twitter and FourSquare into a single framework. “Other apps put FourSquare functions in a separate window and this is distasteful”. Is it really? And how exactly will TweetDeck benefit, financially or otherwise, from this integration? “We spent a lot of time on FourSquare integration but unfortunately it’s not much used”. They should ask themselves why.
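Max’s distinction between continuous location (Latitude) and discrete placement on POIs can be illustrated with a small sketch. Assuming a list of POIs with coordinates and a hypothetical 100 m snapping radius, the code below collapses a stream of GPS fixes into the few check-in events an app actually cares about. It is not TweetDeck’s or FourSquare’s implementation; the function names, the radius and the (approximate) station coordinates are illustrative assumptions.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, in metres


@dataclass(frozen=True)
class Poi:
    name: str
    lat: float
    lon: float


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def snap_to_poi(lat, lon, pois, max_distance_m=100.0):
    """Return the nearest POI within max_distance_m of the fix, or None."""
    best, best_d = None, max_distance_m
    for poi in pois:
        d = haversine_m(lat, lon, poi.lat, poi.lon)
        if d <= best_d:
            best, best_d = poi, d
    return best


def discrete_checkins(fixes, pois, max_distance_m=100.0):
    """Turn continuous (lat, lon) fixes into discrete check-ins.

    A check-in is emitted each time the user arrives at a POI different from
    the one matched by the previous fix; fixes far from any POI are ignored.
    """
    checkins = []
    current = None
    for lat, lon in fixes:
        poi = snap_to_poi(lat, lon, pois, max_distance_m)
        if poi is not None and poi != current:
            checkins.append(poi.name)
        current = poi
    return checkins


if __name__ == "__main__":
    # Approximate, illustrative coordinates for two London stations.
    stations = [Poi("Warren Street", 51.5247, -0.1384), Poi("Kings Cross", 51.5308, -0.1238)]
    gps_stream = [
        (51.5247, -0.1384), (51.5248, -0.1383),  # at Warren Street
        (51.52775, -0.1311),                     # in between: no POI nearby
        (51.5308, -0.1238), (51.5309, -0.1237),  # at Kings Cross
    ]
    print(discrete_checkins(gps_stream, stations))  # → ['Warren Street', 'Kings Cross']
```

Five continuous fixes become two discrete events, which is exactly the kind of placement Max said TweetDeck is after.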

Their TODO list includes geofencing, which might be interesting, so let’s wait and see.

Matthew Watkins, @mazwat – Chromaroma by Mudlark

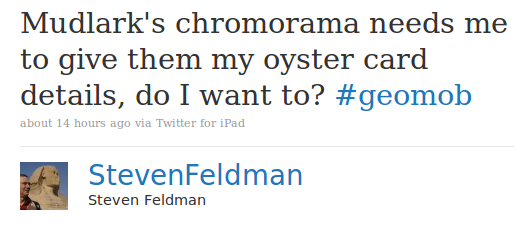

For those of you who don’t know it yet: Chromaroma is a locative game based on your Oyster card touch-ins and touch-outs. They’re still in closed alpha, but the (not so many?) lucky users (I’ve asked to join the alpha 3-4 times, but they never replied) can connect their Oyster account to the game and take part in a sort of Gowalla for transport, based on the number of journeys, stations visited, and personal and team targets.

Two things to consider:

– open data and privacy: upon joining the service, the user’s account page is scraped for their journeys. Matthew explained they approached TfL to ask for APIs/free access to the journey data but “due to budget cuts we’re low priority”. Apparently they’ve been allowed to carry on scraping. The obvious issue is one of trust: why should someone give access to their Oyster account to a company that, technically, hasn’t signed any agreement with TfL?

This is worrying, as to get journey history data you need to activate Auto Top-up. So you’re basically allowing a third party to access an account connected to automatic payments from your payment card.

Secondly, I can’t understand TfL’s strategy on open data here: if they are not worried about the use Mudlark is making of such data, why not provide developers with an API to query the very same data? Users’ consent can be embedded in the API, so I’m a bit worried that Chromaroma is actually exposing TfL’s lack of strategy, rather than their willingness to work with developers. I hope I’m wrong.

– monetising: I’m not afraid of asking the very same question of any company working on this. What is Mudlark’s monetisation strategy, and is it commercially viable? It can’t simply be “let’s build travel profiles of participating users and sell them to advertisers”, as TfL would have done that already. And if TfL haven’t thought about this, or if they’re letting Mudlark collect such data without even requiring them to adhere to some basic T&Cs, we are in serious trouble. However, it’s Mudlark’s declared strategy that doesn’t convince me. Matthew suggests it might be based on targets like “get from Warren Street to Kings Cross by 10 am, show your touch-ins and get a free coffee”, or on the idea of “sponsored items” you can buy. Is the market for this strategy big enough? And, as I’ve already asked, why should a company pay for this kind of advertising when it is potentially available for free? If the game is successful, however, it will be chaos in the Tube, and I’m really looking forward to it 🙂

Oliver O’Brien, @oobr – UCL CASA Researcher

Oliver has recently had his 15 minutes of fame thanks to some amazing live map visualisations of London’s Barclays Cycle Hire availability. He went on to develop visualisation pages for different bicycle hire schemes all around the world, before receiving a cease-and-desist request from one of the companies involved. As a researcher, he gave the GeoMob some interesting insight by showing some geo-demographic analysis. For example, weekday vs weekend usage patterns differ depending on the part of the world involved. London is very weekday-centric, showing that the bicycles are mainly used by commuters. I wonder whether this analysis could also provide as much commercial insight as Chromaroma’s intended use of Oyster data.
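To make the weekday vs weekend comparison concrete, here is a minimal sketch of one possible metric, assuming nothing more than a list of hire start times: the ratio between the average number of trips per weekday and per weekend day. This is not necessarily the analysis Oliver presented; the function name and the metric itself are illustrative assumptions.

```python
from collections import Counter
from datetime import datetime


def weekday_weekend_ratio(trip_starts):
    """Average trips per weekday divided by average trips per weekend day.

    trip_starts: iterable of datetime objects (hypothetical hire start times).
    Averages are taken over the days on which at least one trip was recorded.
    A ratio above 1 suggests weekday (e.g. commuter) dominated usage.
    """
    trips_per_day = Counter(ts.date() for ts in trip_starts)
    # date.weekday(): Monday is 0, Saturday is 5, Sunday is 6.
    weekday_counts = [n for d, n in trips_per_day.items() if d.weekday() < 5]
    weekend_counts = [n for d, n in trips_per_day.items() if d.weekday() >= 5]
    if not weekday_counts or not weekend_counts:
        raise ValueError("need trips on at least one weekday and one weekend day")
    weekday_avg = sum(weekday_counts) / len(weekday_counts)
    weekend_avg = sum(weekend_counts) / len(weekend_counts)
    return weekday_avg / weekend_avg


if __name__ == "__main__":
    # Made-up sample: Mon 4 Oct and Tue 5 Oct 2010, then Sat 9 and Sun 10 Oct.
    starts = [
        datetime(2010, 10, 4, 8, 5), datetime(2010, 10, 4, 8, 20),
        datetime(2010, 10, 4, 18, 0), datetime(2010, 10, 4, 18, 30),
        datetime(2010, 10, 5, 8, 10), datetime(2010, 10, 5, 17, 45),
        datetime(2010, 10, 9, 14, 0), datetime(2010, 10, 10, 11, 0),
    ]
    print(weekday_weekend_ratio(starts))  # → 3.0
```

Normalising by the number of days of each kind matters: there are five weekdays for every two weekend days, so raw totals alone would make almost any city look weekday-centric.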

Thumbs up for the itoworld-esque animation visualising bike usage over the last 48 hours, which showed that a properly done geo-infographic can be extremely useful for problem analysis. Oliver’s future work seems targeted at this, and ideally we’ll hear more about travel patterns and how they affect the usability of bicycle hire schemes. I can’t really understand why he was asked to take some of the maps down.

Eugene Tsyrklevich, @tsyrklevich – Parkopedia

The main lesson of this presentation: stalk your iPhone app users, find them on the web, question them and get them to change their negative reviews.

An aggressive strategy that can probably work, and I would actually describe Parkopedia’s strategy as positively aggressive. They managed to strike a deal with the AA to brand their parking-space-finding app in exchange for a share of the profits.

Eugene’s presentation was more about business management than development. Nonetheless, it was full of insight, especially on how to successfully market an iPhone application. “Working with known brands gives you credibility, and it opens doors”. The main door this opened was actually Apple’s interest in featuring their app on the App Store, which led to an almost immediate 30-fold increase in sales. This in turn brings further credibility and good sales: “Being featured gets you some momentum you never lose”. This is a good lesson for all our aspiring geo-developers.

-

4G? No, really?

Vic Gundotra on Android 2.2:

- 2x-5x increase in speed (due to just-in-time compilation)

- tethering and portable hotspot

- impressive voice recognition capabilities

- cloud/app communication with instant mobile/desktop synchronisation

- Adobe Flash (“It turns out that on the Internet, people use Flash.” is my favourite quote ever…)

Steve Jobs on iPhone 4G:

- You can play Farmville

Do I need to add anything more? 🙂

-

Cold thoughts on WhereCampEU

What a shame to have missed last year’s WhereCamp. The first WhereCampEU, in London, was great and I really want to be part of such events more often.

WhereCampEU is the European version of this popular unconference about all things geo. It’s a nonplace where you meet geographers, geo-developers, geo-nerds, businesses, the “evil” presence of Ordnance Survey (brave, brave OS guys!), geo-services, etc.

I’d just like to write a couple of lines to thank everyone involved in the organisation of this great event: Chris Osborne, Gary Gale, John Fagan, Harry Wood, Andy Allan, Tim Waters, Shaun McDonald, John Mckerrel, Chaitanya Kuber. Most of them were people I had been following on Twitter for a while, or whose blogs are amongst those I read daily; some of them I had already met at other meetups. Either way, it was nice to make eye contact, again or for the first time!

Some thoughts about the sessions I attended:

- Chris Osborne’s Data.gov.uk – Maps, data and democracy. Mr GeoMob gave an interesting talk on democracy and open data. His trust in democracy and transparency is probably quintessentially British, as in Italy I wouldn’t be so sure about openness and transparency as examples of democratic involvement (e.g. the typical “everyone knows things that are not changeable even when a majority don’t like them”). The talk was indeed mind-boggling, especially regarding the impact of the heavy deployment of IT systems to facilitate public service tasks: although these systems were supposed to increase the level and transparency of such services, they had a strong negative impact on the perceived service level (in terms of cost and time).

- Gary Gale’s Location, LB(M)S, Hype, Stealth Data and Stuff and Location & Privacy; from OMG! to WTF? Although his job title includes the word “engineering”, Gary is very good at giving talks that make his audience think and feel involved. Two great talks on the value of privacy with respect to location. How much do you think your privacy is worth? Apparently, the average person would sell all of his or her location data for £30; Gary managed to spark controversy amidst supposedly uncontroversial claims that “£30 for all your data is actually nothing”, a very funny moment (some people should rethink their sense of value when talking about the UK, or at least save philosophical arguments for the pub).

- CycleStreets’ Martin Lucas-Smith’s CycleStreets Cycle Routing: a useful service developed by two very nice and inspired guys, providing cycling route maps on top of OpenStreetMap. Their strength is that routes are calculated using rules that mimic what cyclists actually do (their motto being “For cyclists, By cyclists”). Being a community service, they tried (and partially managed) to obtain funding from councils. An example of an alternative, but still viable, business model.

- Steven Feldman’s Without a business model we are all fcuk’d. Apart from the lovely title, whoever starts a talk by saying “I love the Guardian and hate Rupert Murdoch” gains my unconditional appreciation 🙂 Steven gave an interesting talk on what I might call “viable business model detection techniques”. As in a “business surgery”, he had some people in the audience (Ordnance Survey, cyclestreetmaps, etc.) analyse their own businesses and identify their weaknesses and strengths. A hands-on workshop that I hope he’s going to repeat at other meetings.

- OpenStreetMap: a Q&A session with a talk from Simone Cortesi (whom I finally managed to meet in person), showing that OSM can support a viable and profitable business model, and even stressing that they are partially funded by Google.

Overall level of the presentations: very, very good, and much better organised than I expected. Unfortunately I missed the second day due to a badly timed trip I had booked 🙂

Maybe some more involvement from the big players would be interesting. Debating their strategy face to face, especially when the geo-community is (constructively) critical of them, would benefit everyone.

I mean, something slightly more exciting than a bunch of Google folks using a session to say “we are not that bad” 🙂