Disclaimer: This is a dashboard/notepad-like stream of ideas and questions, rather than a proper blog post 🙂

WherecampEU, Berlin

An amazing time with some of the best minds around. Some points I’d like to put down and think about later:

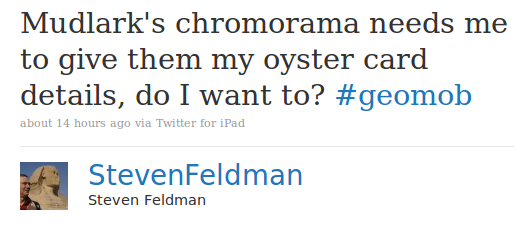

1) Ed Parsons (@edparsons) ran a very interactive session about what kind of open data developers expect from public authorities and companies. One of the questions asked was “would you pay to get access to open data?”. This issue has long been overlooked. Consider for a moment just public authorities: they are non-profit entities. Attaching an open license to data is quick and cheap. Maintaining that data and making it accessible to everyone is not. As developers and activists we need to push the Government to publish as much data as they can. However, we want that data to be sustainable. We don’t want to lose access to data for lack of resources (think about TfL’s TrackerNet). Brainstorming needed…

2) Gary Gale (@vicchi) and his session on mapping as a democratic tool can be reduced to a motto: we have left OS times, we are in OSM times. Starting from the observation that we don’t talk about addresses but about places, part of the talk was dedicated to the effort Gary and others are putting into defining a POI standard. The idea is to let the likes of Foursquare, Gowalla, Facebook Places, etc., store their places in a format that makes importing and exporting easy. Nice for neogeographers like us, but does the market really want it? Some big players are part of the POI WG, some are not.

3) I really enjoyed the sessions on mobile games, especially the treasure hunt run by Skobbler. However, some of these companies seem to suffer from the “yet another Starbucks voucher” syndrome. I’m sure that vouchers and check-ins can be part of a business plan, but when asked how they intend to monetize their effort some of these companies reply with a standard “we have some ideas, we are holding some meetings”. Another issue that needs to be addressed carefully – and that seems to be a hard one – is how to ensure that location is reported accurately and honestly. It doesn’t take Al Capone to understand that you can easily cheat on your location and that when money is involved things can get weird.

4) It was lovely to see Nokia and Google on the same stage. Will it translate into some cooperation, especially with respect to point 2)?

5) I can’t but express my awe at what CASA are working on. Ollie’s maps should make it into the manual for every public authority’s manager: they are not just beautiful, they also make concepts and problem analysis evident and easy to appreciate for people who are not geo-experts. And by the way, Steven’s got my dream job, dealing with maps, data, and RepRap 😀

6) Mark ran a brainstorming session about his PhD topic: how to evaluate trust in citizen-reported human crisis reports. This is a very interesting topic, and he writes about it extensively on his blog. However, I’m not sure this question can have a single answer. My feeling is that different situations might require different models of trust evaluation, to the point that each incident could be so peculiar that even creating categories of crisis would prove impossible. Mark’s statistical approach as a starting point for his work might yield an interesting analysis. I’m looking forward to seeing how things develop.

7) Martijn’s talk about representing history in OpenStreetMap exposes a big problem: how to deal with the evolution of a map. This is important from two points of view: tracking down errors, and representing history. This problem requires a good brainstorming session, too 🙂

8) Can’t help but praise Chris Osborne for his big data visualisation exposing mcknut’s personal life 🙂 And also for being the best supplier of quotes of the day and a great organiser of this event, as much as Gary.

What didn’t quite work

Just a couple of things, actually:

1 – live code presentations are doomed, as Gary suggested. They need better preparation and testing.

2 – no talk should start with “I’ve just put these things together”. This being an unconference doesn’t mean you should show something of poor quality, or even give that impression.

Me wantz

Next time I wish to have:

1 – PechaKucha-style lightning presentations on day 1, to help people understand what sessions they want to attend

2 – similarly to point 1, a wiki with session descriptions and, upon completion, comments, code, slides, etc.

3 – a hacking/hands-on/workshop session, on the model of those run at #dev8d.